In 2004, I was mad. I was sitting in my first journalism class in college while my professor explained how manual typesetting worked. Why, I thought, was my dad paying $20,000 per year for me to learn a long-obsolete technology?

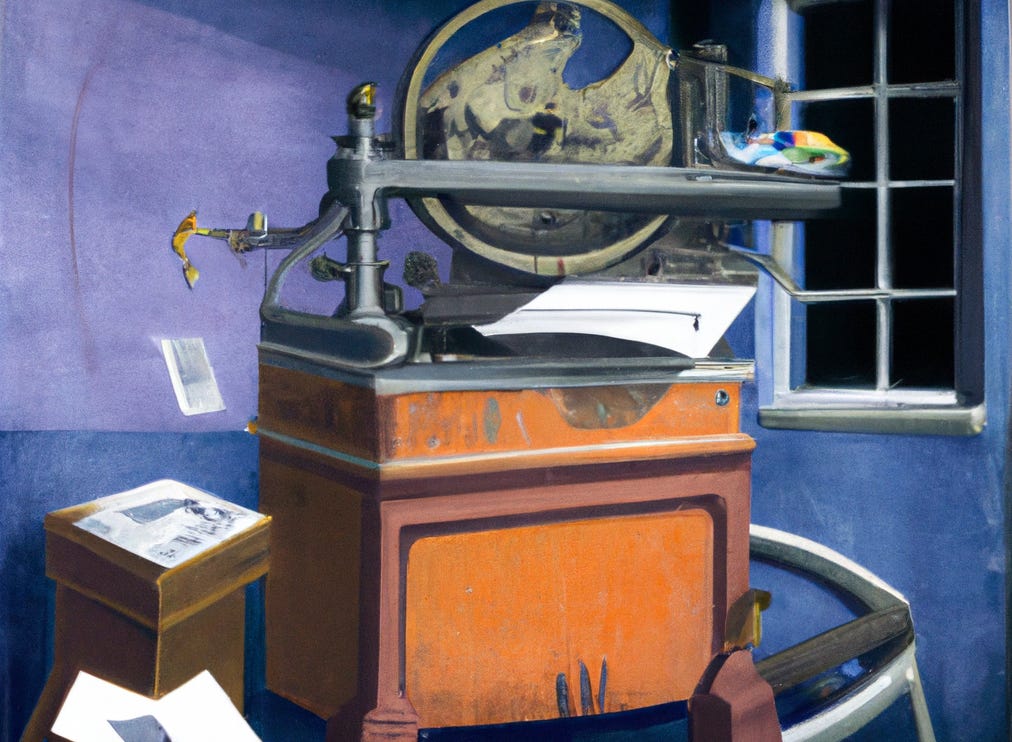

For those unfamiliar, “sharing” written “content” before the internet required machines that would squirt or press ink onto pieces of paper. The OG machine was the printing press. Writers would carve a page of text out of a sheet of metal that they’d cover with ink and then stamp onto each piece of paper. Want a publication with more than one page? Carve another sheet of metal.

At some point, someone figured out that it’s more efficient to carve stamps for each letter and then arrange them in order for each page of text. “Movable type” made publishing way faster, cheaper, and easier.

That’s what I thought of while reading this:

Author Noah claims that one day ChatGPT will be able to outperform the very best lawyers, journalists, scientists, writers, researchers, engineers, programmers, analysts, designers, filmmakers, and architects. And he’s worried intellectuals’ lives will be meaningless once ChatGPT is the most talented intellectual around.

There are only two problems with this thesis. First, Noah is wrong about AI taking the very best intellectuals’ jobs. Second, he’s wrong about meaning.

Look, man. I’m just a silly little Alabama girl typing away at her online diary. Far be it from moi to opine on what comprises an intellectual as if I have any authority to speak on the matter.

But, when has that ever stopped me before?

What I want to say is one of those ideas that’s like… it kind of feels so obvious to me that it’s slightly difficult to put it into words.

First, let’s define the thing Noah thinks is going to be automated, which we’ll call “intellectual output.”

This is essentially knowledge and ideas. Intellectuals create and distribute the knowledge and ideas that change the world (ideally for the better, but not always).

The history of technology involves a lot of automating up the stupid-chain. Back in the day, the writer often had to manually set movable type if they wanted to get their shit out there.

Today, intellectuals usually don’t have to do anything as rote and time-consuming as setting movable type to create and distribute their ideas and information.

But, in my humble opinion, intellectuals today still spend 99% of their time doing what I would describe as SHITWORK.

To illustrate my point, let’s look at three intellectual jobs Noah thinks will be fully automated by AI: lawyers, policy analysts, and researchers.

The vast majority of legal cases are decided based on three questions I could easily see an AI answering more accurately than the best human lawyer or judge:

Which laws apply here?

What case law is applicable?

What’s the probability both sides are accurately representing reality?

So it seems likely to me that within 20 years, most lawsuits will be fully automated.

Let’s look at another example, from Ezra Klein’s great recent piece on the CHIPS Act and its child care requirement. Today, most policy analysts essentially estimate how many chips the US would likely manufacture with and without the child care requirement. One day, AI will produce an estimate that is faster, cheaper, and more accurate than any human’s.

Let’s look at another example. Right now, to run a study, a researcher usually has to do all or most of the following tasks:

Come up with a question/hypothesis

Secure funding

Get access to the shit they’re going to study

Run the study

Do the math to see whether the results are noise

Write up the study

Submit it to reviewers

AI is going to complete most of those tasks faster, cheaper, and better than human researchers today.

So far, it sounds like Noah and I are in agreement. But we’re not. Because I’m saying “most,” while he’s saying “all,” including “the best.”

But he’s missing what makes an intellectual an intellectual. You have to be smart, yes, to do intellectual shitwork. Dummies usually can’t determine which laws apply to a particular case, estimate how a child care requirement will impact chip production, or write a winning grant. Guess what? In 1297, dummies couldn’t set movable type either.

But shitwork is still shitwork, even if it requires smarts.

Intellectual work is:

Deciding whether there’s a compelling reason not to defer to existing case law.

One day, AI will be able to tell us how challenging versus hewing to existing case law will likely impact anything we might be able to measure: economic growth, air pollution, average IQ, whatever. What it’s hard for me to imagine it doing is deciding whether something is “fair.”

Deciding which outcomes to prioritize in a policy debate.

I can’t imagine an AI deciding whether guaranteeing some percentage of female welders don’t have to worry about getting a babysitter in order to come to work is worth building fewer chips in the US.

Deciding which questions/hypotheses to test.

AI will be able to tell us whether legalizing density alleviates or worsens loneliness on average. But it’s unlikely to come up with the question.

THIS KINDA SHIT, MY BABIES, IS WHAT AN INTELLECTUAL DOES.

I’m going to make a prediction that I think encapsulates my argument: Within 20 years, people are going to look back on how intellectuals spend the vast majority of their time with the same head-shaking sadness we now feel when we think about a brilliant writer having to spend time setting their own type.

I’m going to say something sassy. If you can imagine a world in which intellectuals run out of work to put AI to, questions to ask, optimizations to seek, information to obtain, then you’re not the kind of person who will be considered an intellectual in 20 years.

And that’s fine! When photography became cheap, a shit-ton of illustrators lost their jobs. All of them adapted their style, moved into a different line of work, or died.

The exact same thing is going to happen to intellectuals. Because 99% of the time required for intellectual output right now goes into SHITWORK, we currently employ a lot more intellectuals than we’re going to need later.

If you’re a researcher who’s really good at finding subjects or writing grants but not so great at coming up with ideas to test, you’re going to be out of work. You’ll either find new work or, hopefully, do something else, because no one will have to work to survive by then.

Here’s where it gets really interesting, at least to me.

AI is probably eventually going to do all those “intellectual” tasks too.

I can easily see a world where humanity has broadly agreed on something to optimize for. Call it “safer” or “fairer” or “more interesting.” As long as we have ways to measure those outputs, AI will be able to come up with and test all sorts of ideas for maximizing that return.

But who decides whether to optimize for safer or more interesting? What we want the world to look like is fundamentally, I believe, an aesthetic determination. And that’s where it’s very hard for me to imagine AI outperforming humans, at least by human standards. Because we set the standards. We are the standards. The question at the heart of every question when you dig down deep enough is: What feels good to me? What feels good to other humans?

Because humans crave novelty, answering this question will occupy intellectuals for as long as humanity exists.

I’m not at all worried about not having anything to do once AI takes over because here’s my scandal: I think I’m not very bright but extremely intellectual. I am not smart enough to do 99% of intellectual shitwork. I don’t memorize well enough to be good at case law. I don’t math well enough to get a job at a think tank doing policy work or at a school doing research.

Hell, half the time I’m too impatient to proofread this newsletter, and I’m never smart enough to write it correctly the first time.

But you know what I am really fucking good at, in my opinion? Asking good questions. When AI takes over, and I don’t need to have memorized case law or learned how to use stats software to answer hard questions, and a robot is proofreading my shit for typos, I’m going to be busier than fucking ever. As will a lot of people.

Okay, now for the more important part.

I’ve written about this a lot already, so I’m not going to dwell on it here, but for most people most of the time, meaning comes from relationships, not intellectual output.

And I have absolutely no reason in the world to believe intellectuals are any different.

Guess what people have more time for when they don’t have to do SHITWORK? Connecting with other humans.

Sex and the State is a newsletter at the intersection of policy and people.
